Topics and Goals

Welcome to QuantumCLEF (qCLEF), an innovative evaluation lab at the intersection of Quantum Computing (QC), Information Retrieval (IR), and Recommender Systems (RS).

In today's data-driven world, IR and RS systems are challenged by the explosive growth of data and the need for computationally intensive algorithms. Quantum Computing offers new possibilities to address these demands. Although it has already seen applications in various domains, its potential remains largely untapped within IR and RS. The emerging field of Quantum IR uses principles of quantum mechanics to model IR problems, but it has yet to explore the practical implementation of IR and RS systems using quantum technologies.

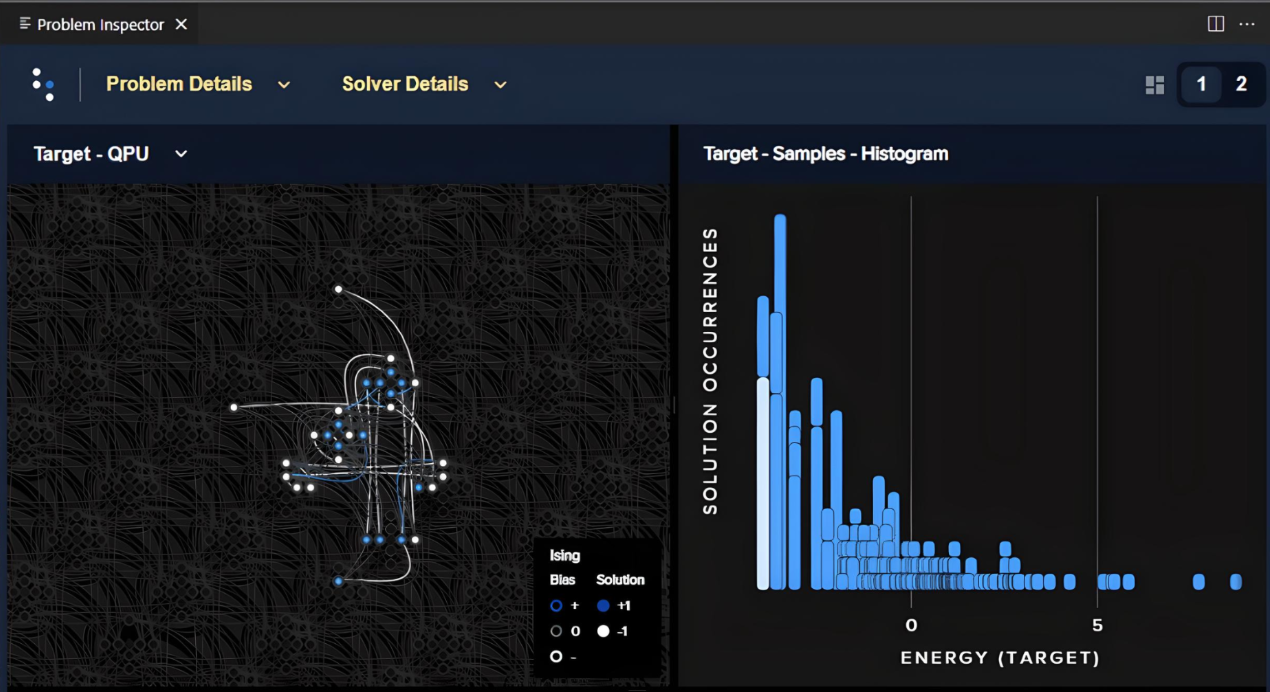

At QuantumCLEF, we focus on Quantum Annealing (QA), a Quantum Computing approach that uses specialized devices to efficiently solve complex optimization problems by leveraging quantum-mechanical effects. Our mission is to explore whether Quantum Annealing can improve the efficiency and effectiveness of IR and RS systems.

QuantumCLEF aims to:

- Benchmark the performance of Quantum Annealing against traditional approaches in IR and RS;

- Identify novel formulations for IR and RS algorithms that can leverage Quantum Annealing;

- Foster a research community dedicated to applying Quantum Computing technologies in IR and RS.

Quantum Annealing is accessible to researchers with or without a background in quantum physics, thanks to user-friendly tools and libraries designed for this paradigm. We invite you to join us in advancing the field and unlocking new capabilities in IR and RS through Quantum Computing.